Knowing why

Start by clearly defining your objectives for migrating the pipelines to Nextflow; they will guide every later decision about approach and scope. Whether you migrate solely to Nextflow or also use the Seqera Platform for management, make sure the workflows are not merely converted but also reworked to deliver the features, such as scalability and portability, that likely motivated the migration in the first place.

Asking the right questions

You may want to start by asking questions that help put together a technical to-do list. Here are some examples:

- Pipeline Inventory: How many pipelines need to be converted to Nextflow?

- Optimization: Are there any parts of the current pipeline that are rarely used or obsolete? Should these parts be removed during the conversion process?

- Tools Upgrade: Should the tools and dependencies in the current pipeline be upgraded during the conversion, or should they remain as-is?

- Capacity Planning: What is the desired throughput of the Nextflow pipelines in terms of sample processing?

Answering these questions will clarify the scale of the migration and the specific requirements for developing the “new” Nextflow pipelines. Blindly converting the pipelines without addressing these considerations could carry over outdated practices and technical debt, and potentially introduce inefficiencies.
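The capacity planning question can be turned into a rough number early on. The sketch below is a back-of-the-envelope estimate, not a sizing tool: it assumes every sample takes about the same wall-clock time and that compute is available around the clock. The function name and the example figures are hypothetical.

```python
import math


def required_parallel_slots(samples_per_day: int, hours_per_sample: float) -> int:
    """Estimate how many samples must run concurrently to meet a daily target.

    Simplifying assumptions: uniform per-sample runtime and 24 hours of
    available compute per day.
    """
    if samples_per_day < 0 or hours_per_sample < 0:
        raise ValueError("inputs must be non-negative")
    return math.ceil(samples_per_day * hours_per_sample / 24)


# Hypothetical target: 96 samples per day at roughly 3 hours per sample.
slots = required_parallel_slots(samples_per_day=96, hours_per_sample=3.0)
print(f"Concurrent samples needed: {slots}")
```

An estimate like this is only a starting point; measured runtimes from testing should replace the assumed per-sample figure once they are available.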

Getting Started

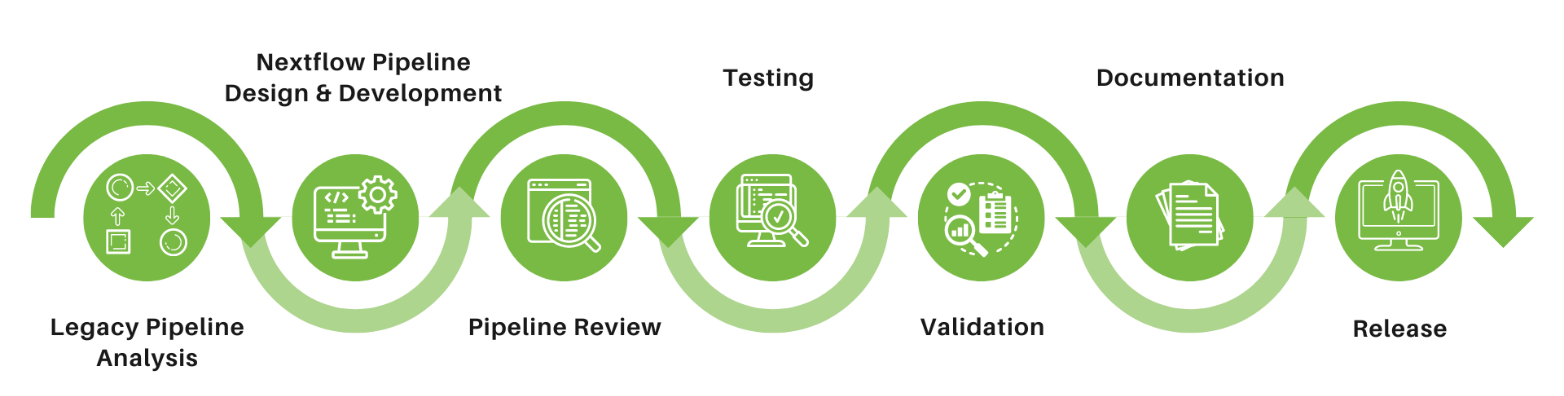

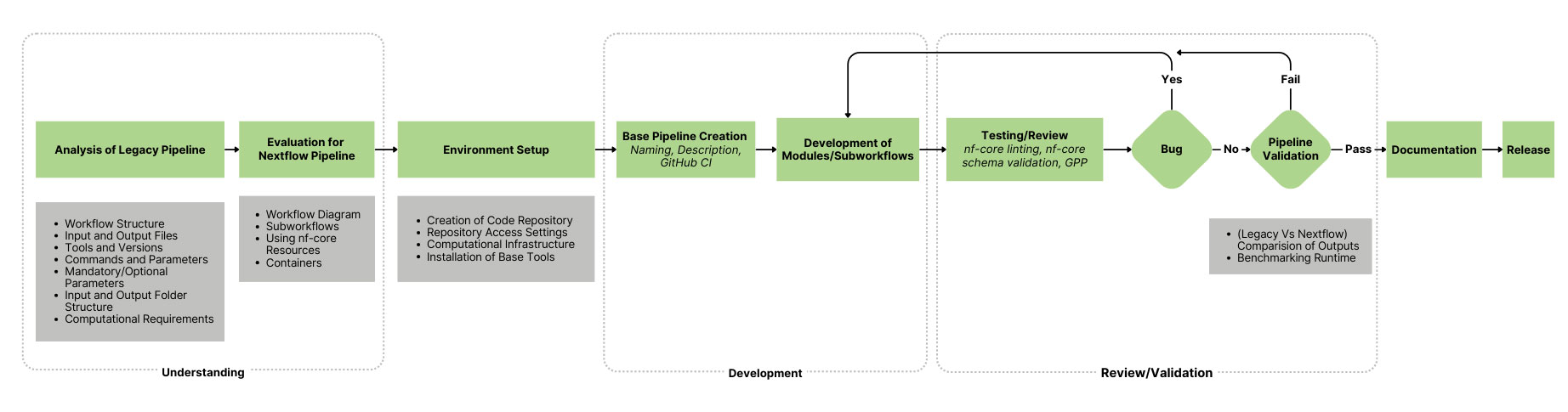

Migrating a pipeline to Nextflow involves a structured approach to ensure a seamless transition. Figure 1 shows Zifo’s comprehensive approach to facilitate migrations.

Figure 1: Zifo’s approach to Nextflow pipeline migration

Step 1. Legacy Pipeline Analysis

Before beginning development tasks, investing time in understanding the current pipeline is crucial. This upfront effort ensures more accurate planning and reduces the need for revisions later on.

Here are some objectives for this step:

| Objective | Details |
| --- | --- |
| Comprehensive Assessment of the Existing Pipeline | Analyze the Workflow Structure: Understand the sequence of tasks and how data flows through the pipeline and its tools/modules, giving particular importance to any custom scripts. |
| Create a Detailed Workflow Diagram | Inputs and Outputs: Identify the inputs (including databases, reference genomes, etc.) required for each step and the expected outputs.<br>Tools and Versions: Clearly specify each tool used in the workflow, along with the version to ensure compatibility and reproducibility.<br>Commands and Parameters: List the exact commands used in the pipeline, including all parameters.<br>Mandatory Parameters: Highlight which parameters are essential for the pipeline to run correctly.<br>Optional Parameters: Specify optional parameters and their default values, if any.<br>Input and Output Folder Structure: Define a clear structure for input (for samples, reference genomes, etc.) and output directories (e.g., sample-wise or tool-wise). |
| Computational Requirements | Understand the computational requirements of the overall pipeline and individual tools (e.g., input data size, reference data/databases, size of output per sample, file retention, etc.). |
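One way to keep the details above consistent across many pipelines is to record each step in a small structured inventory. The following is a minimal sketch, assuming a Python dataclass is an acceptable format for your team; the class name, field names, and the example entry (tool name, paths, parameters) are all hypothetical placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class PipelineStep:
    """One step of the legacy pipeline, as recorded during analysis."""
    name: str
    tool: str
    version: str
    command: str
    inputs: list[str]
    outputs: list[str]
    mandatory_params: dict[str, str] = field(default_factory=dict)
    optional_params: dict[str, str] = field(default_factory=dict)  # name -> default


def missing_details(step: PipelineStep) -> list[str]:
    """Return the names of essential fields that are still empty for a step."""
    essential = ("tool", "version", "command", "inputs", "outputs")
    return [name for name in essential if not getattr(step, name)]


# Hypothetical entry; the version has deliberately been left blank.
align = PipelineStep(
    name="alignment",
    tool="example-aligner",
    version="",
    command="example-aligner --ref ref.fa --reads sample.fastq.gz",
    inputs=["ref.fa", "sample.fastq.gz"],
    outputs=["sample.bam"],
    mandatory_params={"--ref": "reference genome FASTA"},
    optional_params={"--threads": "4"},
)
print(missing_details(align))
```

Running a check like `missing_details` over the whole inventory gives a quick list of what still needs to be tracked down before development starts.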

Step 2. Nextflow Pipeline Design & Development

Development in Nextflow goes more smoothly after some preparation: create a workflow diagram and evaluate the aspects listed below.

| Aspect | What to evaluate |
| --- | --- |
| Tools and Versions | Clearly specify each tool to be included in the workflow, along with the desired version. The version could be different from the legacy pipeline based on requirements. In the case of custom scripts, evaluate whether they need to be retained as-is or can be made more efficient using Nextflow operators. |
| Subworkflows | Identify which of the tools can be logically grouped together in a subworkflow. |
| Using nf-core modules/subworkflows | Evaluate which modules/subworkflows are already available in nf-core and can be reused. |
| Docker/Singularity | Identify the Docker/Singularity containers that can be used. If a container is unavailable for a specific version of a tool, create one and identify the appropriate location to host it. |
| Test Data | Identify the test data required for development, review, and testing. This will include minimal test data for initial stages followed by more realistic datasets for testing the robustness of the pipeline. |

Pipeline Development Prerequisites

Before moving to the next steps, a few final tasks need to be handled. Taking care of them up front ensures consistency and helps manage the migration effectively, especially when dealing with a large-scale migration of pipelines.

| Task | Details |
| --- | --- |
| Set-up | Code Repository: Set up a version-controlled code repository (e.g., GitHub).<br>Computational Infrastructure: Set up a Linux-based computational environment (e.g., an on-premises server or an AWS EC2 instance). It is useful to have tools like Git and Conda, as well as Docker permissions, set up from the start. |
| Project Management | Any project management (PM) tool can be set up to track the progress of pipeline development, such as Jira or even a simple Microsoft Excel spreadsheet. |
| Development/Review Process | A simple process can be defined for developers and reviewers to maintain consistency. This includes establishing naming conventions for Git repository branches, providing comments for commits or pull requests, determining the frequency of commits, and establishing methods to track bugs/enhancements. |

Now that you’ve wrapped up the understanding and preparation tasks, it’s time to set timelines and dive into development!

Figure 2: Pipeline Migration Overview

There isn’t much to elaborate on regarding development itself, given the wealth of open-source training resources available from Seqera for Nextflow pipeline development and deployment on the Seqera Platform. Still, there are several tailored actions you can take to facilitate the process, for instance creating accelerators such as templates for project management and documentation, and guidelines for best practices in development and review. These not only ensure consistency but also streamline workflows, minimize duplication, and enhance overall efficiency.

Step 3. Pipeline Review

Conducting a thorough code review ensures high-quality code by maintaining consistent coding styles, enhancing modularity and reusability, and providing clear error-handling messages that are understandable to end-users.

Step 4. Testing

Leverage resources like ‘nf-test’ to write unit tests for Nextflow workflows, implement continuous integration (CI) with GitHub Actions, and test with various parameter combinations to ensure the pipeline functions as expected. Additionally, consider testing with multiple input datasets to evaluate the pipeline’s robustness and gain insights into its computational capabilities, such as the number of samples it can handle and the size of input and output data.
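Testing with various parameter combinations is easier to keep systematic if the combinations are generated rather than typed by hand. The sketch below builds one `nextflow run` command per combination; it complements nf-test rather than replacing it. The pipeline name, profile, and parameter names are hypothetical, and the commands are only printed, not executed.

```python
from itertools import product


def build_test_commands(
    pipeline: str, profile: str, param_grid: dict[str, list[str]]
) -> list[list[str]]:
    """Build one `nextflow run` command per combination of parameter values."""
    names = list(param_grid)
    commands = []
    for values in product(*(param_grid[name] for name in names)):
        cmd = ["nextflow", "run", pipeline, "-profile", profile]
        for name, value in zip(names, values):
            # Pipeline parameters are passed with a double dash.
            cmd += [f"--{name}", value]
        commands.append(cmd)
    return commands


# Hypothetical pipeline parameters and values, for illustration only.
grid = {"aligner": ["bwa", "bowtie2"], "skip_qc": ["true", "false"]}
for cmd in build_test_commands("main.nf", "test", grid):
    print(" ".join(cmd))
```

Each command list could then be handed to a CI job or a small runner script, which keeps the tested combinations documented in one place.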

If the Seqera Platform is being used, ensure that the pipeline is deployed and tested on the platform to confirm its compatibility and performance.

After development and multiple review and testing cycles, it’s important to compare your new pipeline with the legacy pipeline to ensure it functions as expected and meets the requirements. This takes us to the next step, Validation.

Step 5. Validation

You can define the methods and parameters for validation, such as comparing outputs, computational metrics and more. These criteria should also depend on the goals you set at the start. It’s a smart move to document all your validation results, including the methods and commands used. This is where having a predefined documentation template can make things much smoother, ensuring consistency and ease of use. This step is especially important in clinical settings, where accuracy and consistency are key.

Validation poses various challenges: while some steps may proceed smoothly, others may present hurdles. For instance, an exact comparison of the two pipelines is often hindered by intentional modifications made during migration, such as upgrading tool versions, removing sections or tools, or changing how intermediate files are managed.

These challenges can be navigated by reviewing each difference for accuracy and documenting it. This ensures that any differences are understood and accounted for, leading to a robust validation of the new pipeline’s functionality.
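A simple first pass at comparing outputs is to hash the files produced by both pipelines and sort them into identical, different, and one-sided groups. The following is a minimal sketch, assuming both pipelines write to directories with comparable relative paths; the function names and the demo file names are hypothetical. Byte-level differences are only a prompt for review, since files that embed timestamps or tool versions can differ while still being equivalent.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large outputs do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def relative_files(root: Path) -> set[str]:
    """Collect all files under root as POSIX-style relative paths."""
    return {p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file()}


def compare_outputs(legacy_dir: Path, new_dir: Path) -> dict[str, list[str]]:
    """Classify output files by relative path and content hash."""
    legacy, new = relative_files(legacy_dir), relative_files(new_dir)
    report = {
        "identical": [],
        "different": [],
        "only_in_legacy": sorted(legacy - new),
        "only_in_new": sorted(new - legacy),
    }
    for rel in sorted(legacy & new):
        same = sha256_of(legacy_dir / rel) == sha256_of(new_dir / rel)
        report["identical" if same else "different"].append(rel)
    return report


# Self-contained demo using throwaway directories and made-up files.
with tempfile.TemporaryDirectory() as tmp:
    legacy_out, new_out = Path(tmp, "legacy"), Path(tmp, "new")
    legacy_out.mkdir()
    new_out.mkdir()
    (legacy_out / "counts.tsv").write_text("gene\t10\n")
    (new_out / "counts.tsv").write_text("gene\t10\n")
    (legacy_out / "report.txt").write_text("tool v1\n")
    (new_out / "report.txt").write_text("tool v2\n")
    (legacy_out / "tmp.sam").write_text("intermediate\n")
    demo_report = compare_outputs(legacy_out, new_out)

print(demo_report)
```

The resulting report can be pasted into the validation document, with a short explanation next to every entry that is different or present on only one side.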

The final step in preparing new pipelines is the all-important documentation phase.

Step 6. Documentation

In addition to the validation document mentioned earlier, supplementary documentation needs to provide clear instructions on installing and running the pipeline, along with detailed descriptions of each parameter. This will serve as a comprehensive user manual for the pipeline. If the Seqera Platform is used, it is also helpful to include a quick guide on how to launch the pipeline and configure the necessary options before execution.

Rather than rewriting existing open-source documentation, it’s more efficient to provide links to these resources where relevant. Effective documentation should be designed from the user’s perspective, especially for those who may not have prior experience with Nextflow.
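Parameter descriptions tend to stay accurate longer when they are generated from a single list rather than maintained by hand. The sketch below renders such a list as a Markdown table for the user manual; the dictionary keys and the example parameters are hypothetical and not tied to any particular schema format.

```python
def render_param_table(params: list[dict[str, str]]) -> str:
    """Render parameter descriptions as a Markdown table."""
    lines = [
        "| Parameter | Required | Default | Description |",
        "| --- | --- | --- | --- |",
    ]
    for param in params:
        default = param.get("default") or "none"
        required = param.get("required", "no")
        lines.append(
            f"| --{param['name']} | {required} | {default} | {param['description']} |"
        )
    return "\n".join(lines)


# Hypothetical parameters, for illustration only.
params = [
    {"name": "input", "required": "yes", "description": "Path to the sample sheet"},
    {"name": "outdir", "default": "results", "description": "Directory for pipeline outputs"},
]
print(render_param_table(params))
```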

Step 7. Ready to Release!

Once validation is successfully completed and all issues are resolved, the pipeline is ready for release. At this stage, it is essential to provide necessary training or documentation to users, monitor the pipeline’s real-time performance, and gather feedback for continuous improvement.

With the right framework in place, from gaining a deep understanding of your current pipeline to meticulous validation and seamless deployment, you’re fully equipped to streamline your processes and confidently embrace the migration journey.

Here’s to successfully converting your legacy pipelines to Nextflow!

If you have any questions or would like help with your pipeline migration or ongoing support, please contact us at info@zifornd.com.